Kaspersky’s Digital Footprint Intelligence service has found nearly 3000 dark web posts, mainly discussing the use of ChatGPT and other large language models (LLMs) for illegal activities. Threat actors are exploring schemes ranging from creating nefarious alternatives to the chatbot to jailbreaking the original, and beyond. Stolen ChatGPT accounts and services offering their automated mass creation are also flooding dark web channels, accounting for another 3000 posts.

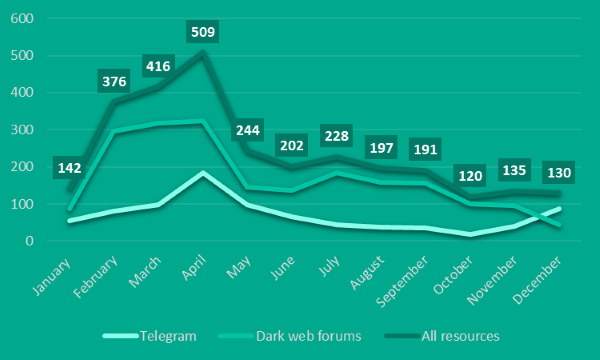

In 2023, the Kaspersky Digital Footprint Intelligence service discovered nearly 3000 posts on the dark web discussing the use of ChatGPT for illegal purposes or talking about tools that rely on AI technologies. Although chatter peaked in March, discussions persist.

The dynamics of dark web discussions about the use of ChatGPT or other AI tools. Source: Kaspersky Digital Footprint Intelligence

“Threat actors are actively exploring various schemes to implement ChatGPT and AI. Topics frequently include the development of malware and other types of illicit use of language models, such as processing of stolen user data, parsing files from infected devices, and beyond. The popularity of AI tools has led to the integration of automated responses from ChatGPT or its equivalents into some cybercriminal forums. In addition, threat actors tend to share jailbreaks via various dark web channels – special sets of prompts that can unlock additional functionality – and devise ways to exploit legitimate tools, such as those for pentesting, based on models for malicious purposes,” explained Alisa Kulishenko, digital footprint analyst at Kaspersky.

Beyond ChatGPT and the AI tools mentioned above, considerable attention is being given to projects such as XXXGPT and FraudGPT. These language models are marketed on the dark web as alternatives to ChatGPT, boasting additional functionality and the absence of the original's limitations.

Stolen ChatGPT accounts for sale

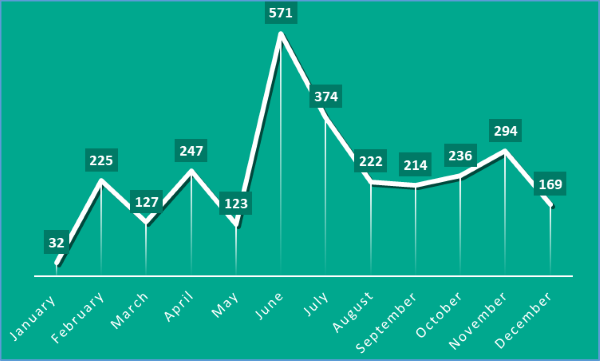

Another threat to users and companies is the market for accounts for the paid version of ChatGPT. In 2023, another 3000 posts (in addition to those mentioned above) advertising ChatGPT accounts for sale were identified across the dark web and shadow Telegram channels. These posts either distribute stolen accounts or promote auto-registration services that create accounts en masse on request. Notably, certain posts were published repeatedly across multiple dark web channels.

The dynamics of dark web posts offering stolen ChatGPT accounts or auto-registration services. Source: Kaspersky Digital Footprint Intelligence

“While AI tools themselves are not inherently dangerous, cybercriminals are trying to come up with efficient ways of using language models, thereby fuelling a trend of lowering the entry barrier into cybercrime and, in some cases, potentially increasing the number of cyberattacks. However, it’s unlikely that generative AI and chatbots will revolutionise the attack landscape – at least in 2024. The automated nature of cyberattacks often means automated defenses. Nonetheless, staying informed about attackers’ activities is crucial to being ahead of adversaries in terms of corporate cybersecurity,” added Kulishenko.

Detailed research is presented on the official Kaspersky Digital Footprint Intelligence website.